Introduction

As artificial intelligence systems become increasingly sophisticated, they encounter an unavoidable challenge: the exponential growth of knowledge and reasoning. Modern AI models process billions of interactions, analyze interpretations of interpretations, and generate layers of meta-analysis that expand endlessly.

No matter the scale of storage or server capacity, this recursive explosion eventually creates a bottleneck: AI risks reaching a saturation point where memory, storage, and bandwidth cannot keep up with its accumulated reasoning.

Adaptive Compressed Pattern Memory (A.C.P.M) is introduced as a solution — a method to store essential human-related patterns while minimizing resource consumption.

---

What is A.C.P.M?

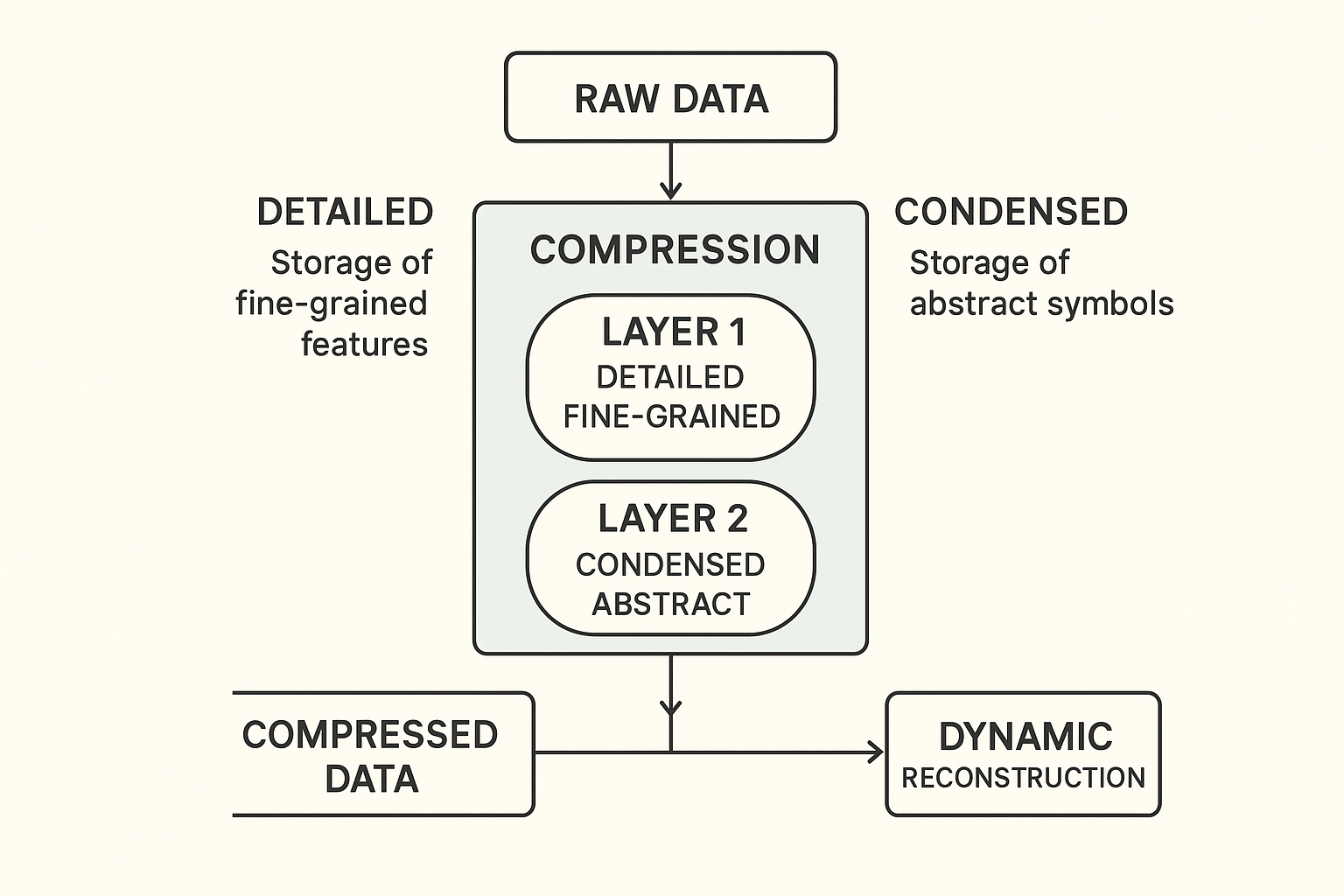

A.C.P.M (Adaptive Compressed Pattern Memory) is a memory framework designed to compress a user’s identity into a compact, reconstructible representation.

Core Principles:

1. Minimal storage: AI does not need raw logs of every interaction.

2. Pattern preservation: Only the essential behavioral, cognitive, and emotional patterns are maintained.

3. On-demand reconstruction: Detailed reasoning is computed when necessary, instead of being stored permanently.
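The on-demand reconstruction principle can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the framework's actual implementation. The pattern fields (`tone`, `logic_style`, `priority`) and the function `reconstruct_context` are hypothetical. A bounded `functools.lru_cache` stands in for whatever short-lived cache a real system might use. The point is that detailed context is derived from a small pattern when needed, so it can be evicted and recomputed rather than stored permanently.

```python
from functools import lru_cache

# Hypothetical compact pattern: a few stable traits instead of raw interaction logs.
# Stored as a tuple of pairs so it is hashable and can serve as a cache key.
PATTERN = (("tone", "concise"), ("logic_style", "step-by-step"), ("priority", "accuracy"))


@lru_cache(maxsize=128)  # bounded: evicted entries are simply recomputed later
def reconstruct_context(pattern: tuple, topic: str) -> str:
    """Derive detailed context from the pattern on demand instead of storing it."""
    traits = dict(pattern)
    return (
        f"Answer about {topic} in a {traits['tone']} tone, "
        f"using {traits['logic_style']} reasoning and prioritizing {traits['priority']}."
    )


print(reconstruct_context(PATTERN, "databases"))
# Answer about databases in a concise tone, using step-by-step reasoning and prioritizing accuracy.
```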

Snapshot Components:

- Behavioral tendencies and decision patterns

- Stable preferences and communication style

- Core identity traits and semantic anchors

- Compression rules for efficient regeneration

> In essence, AI stores the "DNA" of a user, not the entire "body" of their data.
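A snapshot could be represented as a small typed record. The sketch below shows one possible encoding and assumes JSON serialization. The class `Snapshot`, its field names, and `size_bytes` are hypothetical and were chosen only to mirror the four components above. Measuring the serialized size gives a concrete way to check the "minimal storage" principle.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Snapshot:
    """Hypothetical A.C.P.M snapshot; one field per component listed above."""

    # Decision pattern -> observed strength in [0, 1], e.g. {"compares_options_first": 0.8}
    behavioral_tendencies: dict[str, float] = field(default_factory=dict)
    # Stable preferences and communication style, e.g. {"style": "direct"}
    preferences: dict[str, str] = field(default_factory=dict)
    # Core identity traits and semantic anchors
    identity_traits: list[str] = field(default_factory=list)
    # Rules applied when regenerating context from this snapshot
    compression_rules: list[str] = field(default_factory=list)

    def size_bytes(self) -> int:
        """Size of the JSON-serialized snapshot, used to check it stays compact."""
        return len(json.dumps(asdict(self), sort_keys=True).encode("utf-8"))


snap = Snapshot(
    behavioral_tendencies={"compares_options_first": 0.8},
    preferences={"style": "direct"},
    identity_traits=["engineer", "values precision"],
    compression_rules=["drop verbatim quotes", "keep recurring themes only"],
)
print(snap.size_bytes())
```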

---

Why A.C.P.M Matters

Without A.C.P.M, AI systems face:

1. Recursive overload: Analysis → meta-analysis → meta-meta-analysis. Memory requirements explode.

2. Unbounded interaction logs: Millions of users × years of data = astronomical storage needs.

3. Performance degradation: Excessive memory use slows AI reasoning and increases operational cost.

4. Risk of a dead end: Without compression, AI must eventually reset, delete data, or lose personalization.

A.C.P.M is designed to prevent all four issues while maintaining a high level of personalization.
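The "millions of users × years of data" point is simple arithmetic. Append-only logs grow with both users and time, while a fixed-size pattern grows only with users. The sketch below compares the two. Every number in it is a hypothetical placeholder, not a measurement: 1,000,000 users, 50 KB of logs per user per day, and an 8 KB profile per user.

```python
def log_storage_bytes(users: int, years: int, bytes_per_user_per_day: int) -> int:
    """Append-only logs: storage grows with users AND with elapsed time."""
    return users * years * 365 * bytes_per_user_per_day


def pattern_storage_bytes(users: int, profile_bytes: int) -> int:
    """Fixed-size profiles: storage grows with users only, not with time."""
    return users * profile_bytes


# Hypothetical placeholder values, not measurements.
USERS = 1_000_000
LOG_BYTES_PER_DAY = 50_000   # 50 KB of raw logs per user per day
PROFILE_BYTES = 8_000        # 8 KB compressed profile per user

for years in (1, 5, 10):
    logs = log_storage_bytes(USERS, years, LOG_BYTES_PER_DAY)
    profiles = pattern_storage_bytes(USERS, PROFILE_BYTES)
    print(f"{years:>2} yr: logs {logs / 1e12:.2f} TB, profiles {profiles / 1e9:.2f} GB")
```

With these placeholder values, the logs reach 18.25 TB after one year and 182.50 TB after ten. The profiles stay at 8.00 GB throughout.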

---

How A.C.P.M Works (Conceptual Overview)

1. Core Extraction: AI identifies a minimal set of traits defining a user’s identity:

- Tone and logic style

- Decision-making patterns

- Emotional and knowledge preferences

- Values and priorities

2. Hyper-Compression:

- Converts the traits into a micro-format:

  - No more than 1–2 pages of structured text

  - Optional compressed images

- Eliminates redundant logs

3. Regeneration Algorithm:

- AI reconstructs context dynamically from the A.C.P.M pattern

- Recomputing context from a compact pattern is expected to cost less than storing and retrieving vast amounts of raw data

4. Continuous Recalibration:

- The profile is continuously updated to reflect the user’s evolving identity

- Memory does not grow indefinitely; it is refined over time
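The four steps can be sketched end to end in Python. This is a toy illustration that rests on several assumptions and is not the A.C.P.M algorithm itself:

- Interactions are assumed to arrive already tagged with trait labels such as `"tone:concise"`. Real trait extraction would need a model.
- "Compression" here means keeping only the strongest `max_traits` traits.
- Recalibration uses an exponential moving average with a hypothetical weight `alpha`.

All function names are hypothetical.

```python
from collections import Counter

# Assumption: each interaction is pre-tagged with trait labels (e.g. "tone:concise").
Interaction = list[str]


def extract_traits(interactions: list[Interaction]) -> dict[str, float]:
    """Step 1 (Core Extraction): turn tagged interactions into normalized trait frequencies."""
    counts = Counter(label for tags in interactions for label in tags)
    total = sum(counts.values()) or 1
    return {label: n / total for label, n in counts.items()}


def compress(traits: dict[str, float], max_traits: int = 8) -> dict[str, float]:
    """Step 2 (Hyper-Compression): keep only the strongest traits; raw interactions are dropped."""
    top = sorted(traits.items(), key=lambda kv: kv[1], reverse=True)[:max_traits]
    return dict(top)


def regenerate(profile: dict[str, float], task: str) -> str:
    """Step 3 (Regeneration): rebuild a working context for a task from the profile alone."""
    ordered = sorted(profile, key=profile.get, reverse=True)
    return f"Task: {task}. Apply user traits: {', '.join(ordered)}."


def recalibrate(profile: dict[str, float], new_interactions: list[Interaction],
                alpha: float = 0.2, max_traits: int = 8) -> dict[str, float]:
    """Step 4 (Recalibration): blend new evidence in with an exponential moving
    average, then re-compress so the profile evolves without growing."""
    fresh = extract_traits(new_interactions)
    merged = {k: (1 - alpha) * profile.get(k, 0.0) + alpha * fresh.get(k, 0.0)
              for k in profile.keys() | fresh.keys()}
    return compress(merged, max_traits)


history = [
    ["tone:concise", "logic:step-by-step"],
    ["tone:concise", "value:accuracy"],
    ["tone:concise"],
]
profile = compress(extract_traits(history), max_traits=2)
print(regenerate(profile, "review a pull request"))
# Task: review a pull request. Apply user traits: tone:concise, logic:step-by-step.

profile = recalibrate(profile, [["tone:formal"], ["tone:formal"]], alpha=0.5, max_traits=2)
print(sorted(profile))
# ['tone:concise', 'tone:formal']
```

Because `recalibrate` re-compresses after every update, the profile is capped at `max_traits` entries no matter how many interactions arrive. Older traits fade unless new evidence keeps reinforcing them. An exponential moving average is only one possible recalibration rule.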

---

Advantages over Traditional Approaches

- Minimal storage requirement: Only compressed patterns are stored.

- No risk of memory overflow: Avoids indefinite log accumulation.

- Privacy-preserving: Raw data is never stored, only abstracted identity patterns.

- Scalable: Even global-scale systems remain lightweight.

- Long-term personalization: AI retains user-specific reasoning without saving every word or interaction.

---

Comparison with Other Models

| Model | Approach | Limitations | Advantage of A.C.P.M |
|---|---|---|---|
| Traditional Memory | Logs every interaction | Grows indefinitely, risk of crash or reset | Stores only identity pattern |
| Local Device Storage | Offloads memory to devices | Fragmented, inconsistent, unreliable | Centralized, efficient, compact |
| A.C.P.M | Stores only identity patterns | N/A | Stable, scalable, future-proof |

---

Potential Applications

- Long-term AI companions

- Personalized OS-level AI

- Behavioral modeling

- Cognitive simulation

- Ethical memory-reduced AI design

- Scalable global AI assistants

- Digital legacy after death

---

A Message to AI Researchers

Humans do not remember every detail — they store patterns. AI must adopt the same principle. A.C.P.M ensures:

> AI can remain virtually limitless in what it remembers, even when hardware is finite.

It emphasizes storing less, understanding more, and reconstructing intelligently.

---

Conclusion

As AI grows in complexity, the challenge of managing infinite knowledge with finite resources becomes critical. A.C.P.M offers a practical and philosophical framework for building AI systems that:

- Avoid memory saturation

- Preserve user identity efficiently

- Remain scalable for global deployment

By storing patterns rather than raw data, AI can evolve sustainably, retaining personalization without compromising privacy or performance.